Artificial intelligence: Wherever you go, there you are.

“Wherever you go, there you are.”

Years ago, I had the privilege of hearing Julie Cross speak.

If you’ve ever seen her, you’ll know the kind of impact she makes.

You’ll likely leave feeling emotionally drained and impossibly inspired in equal measure.

The line above in particular has stayed with me ever since, for a few reasons.

This is a longer piece that I’ve been sitting on for a little while now, so I’ll get into Julie and why she’s on my mind a little later on.

But lately, I’ve been thinking about her line in the context of artificial intelligence.

Primarily because one of them called me the other day.

An LLM system.

And if I’m honest, it took me a second to realise I wasn’t talking to a human.

It was smooth, mostly. Polite. Just part of a workflow.

The tone was measured, the responses were accurate enough.

But something still felt a little off.

Like talking to a mirror that knew what to say, but not when to say it. Or why it mattered.

It wasn’t bad.

But it wasn’t human.

I thought about hanging up, but I stayed on the line for a bit out of curiosity.

Because the AI conversation is loud right now.

Louder than it’s ever been.

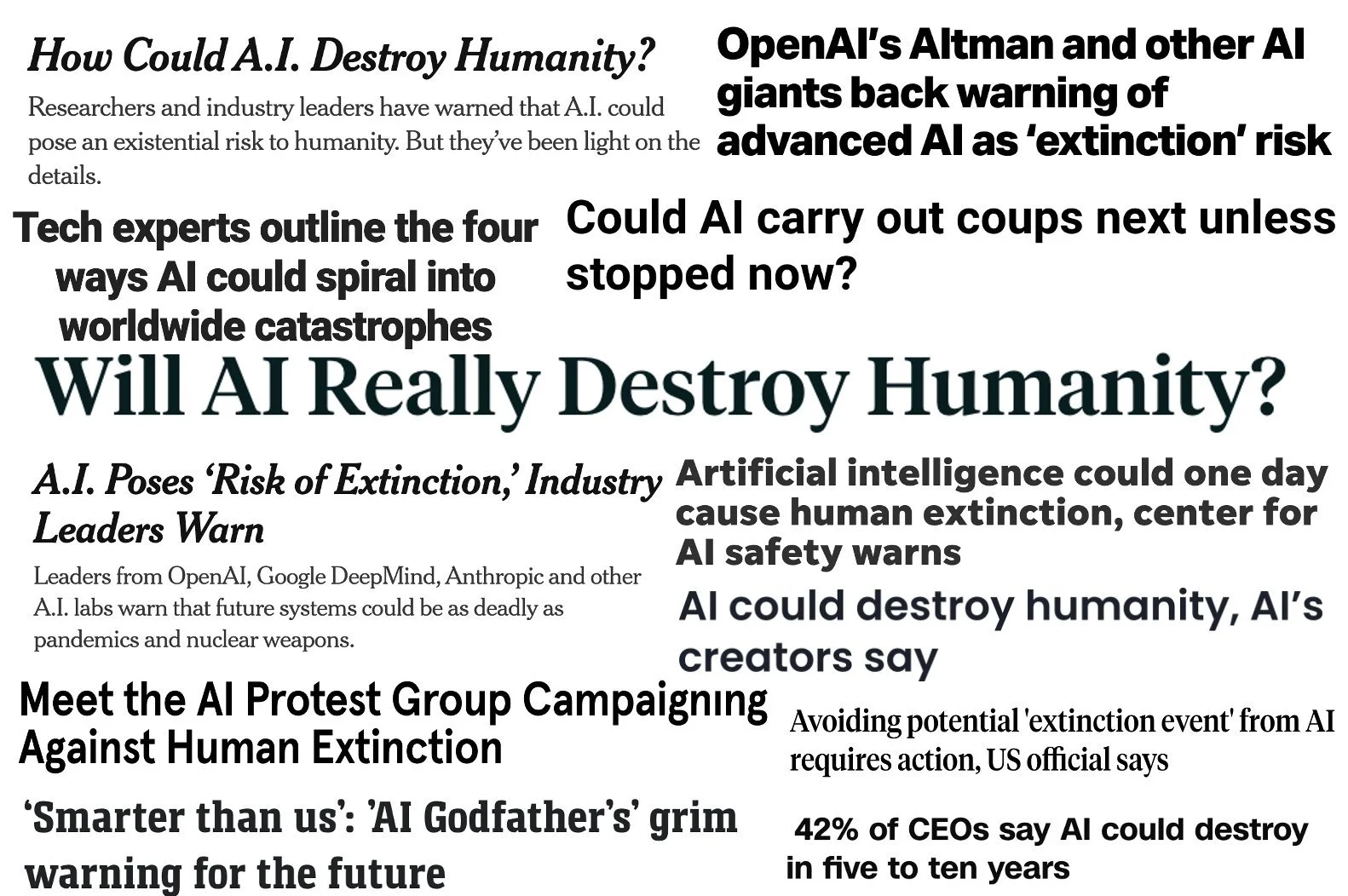

Credit: Dr. Casey Fiesler.

I hear from a lot of people who are worried for their businesses, or their jobs.

And just as many who are excited.

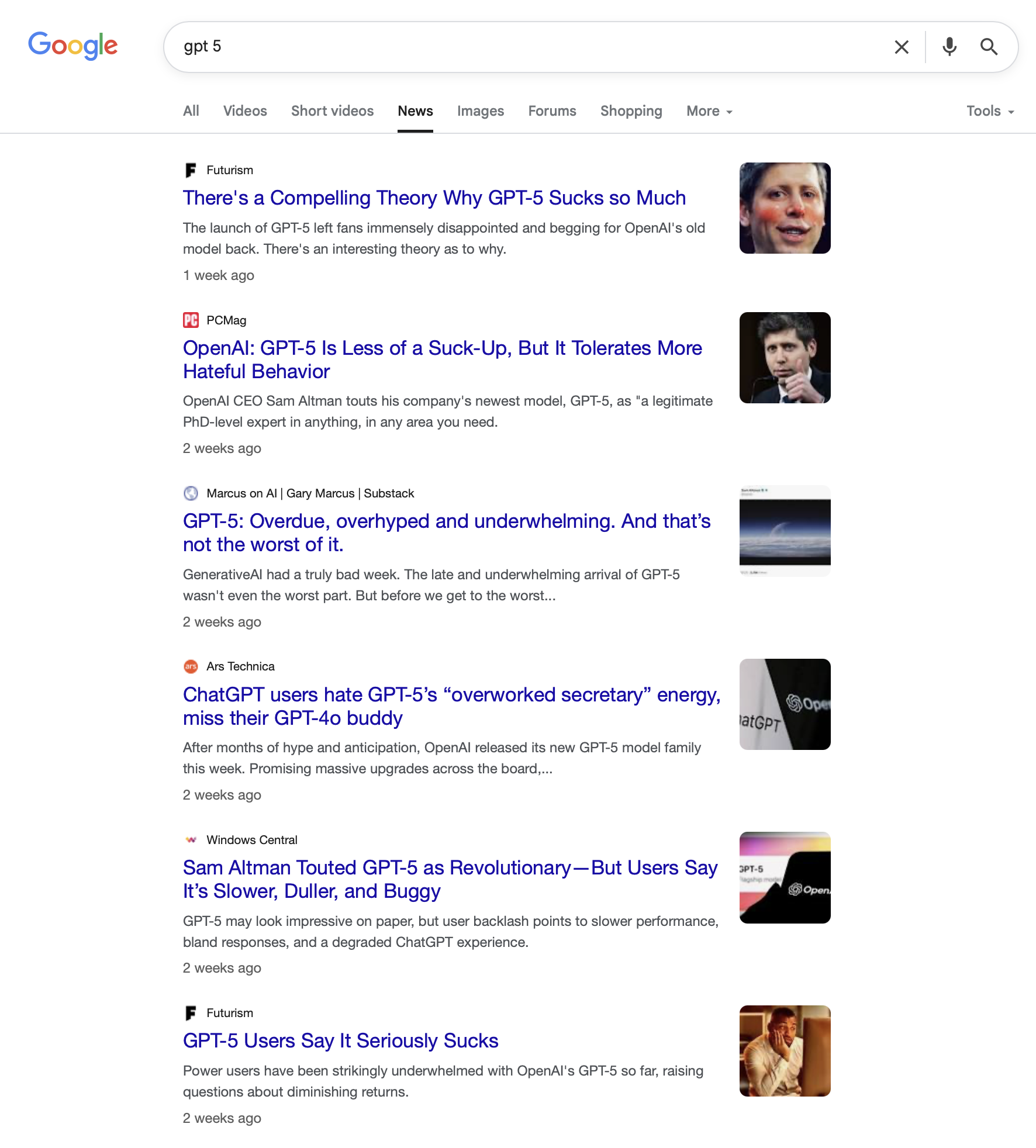

But the release of GPT-5 has sparked just as much criticism as it has curiosity.

For many, it’s fallen short of the hype.

If you’re familiar with the space, you’ll probably agree that it’s as much to do with scaling laws plateauing (for now) as it is to do with the monumental marketing efforts that went into promising something that wasn’t yet real.

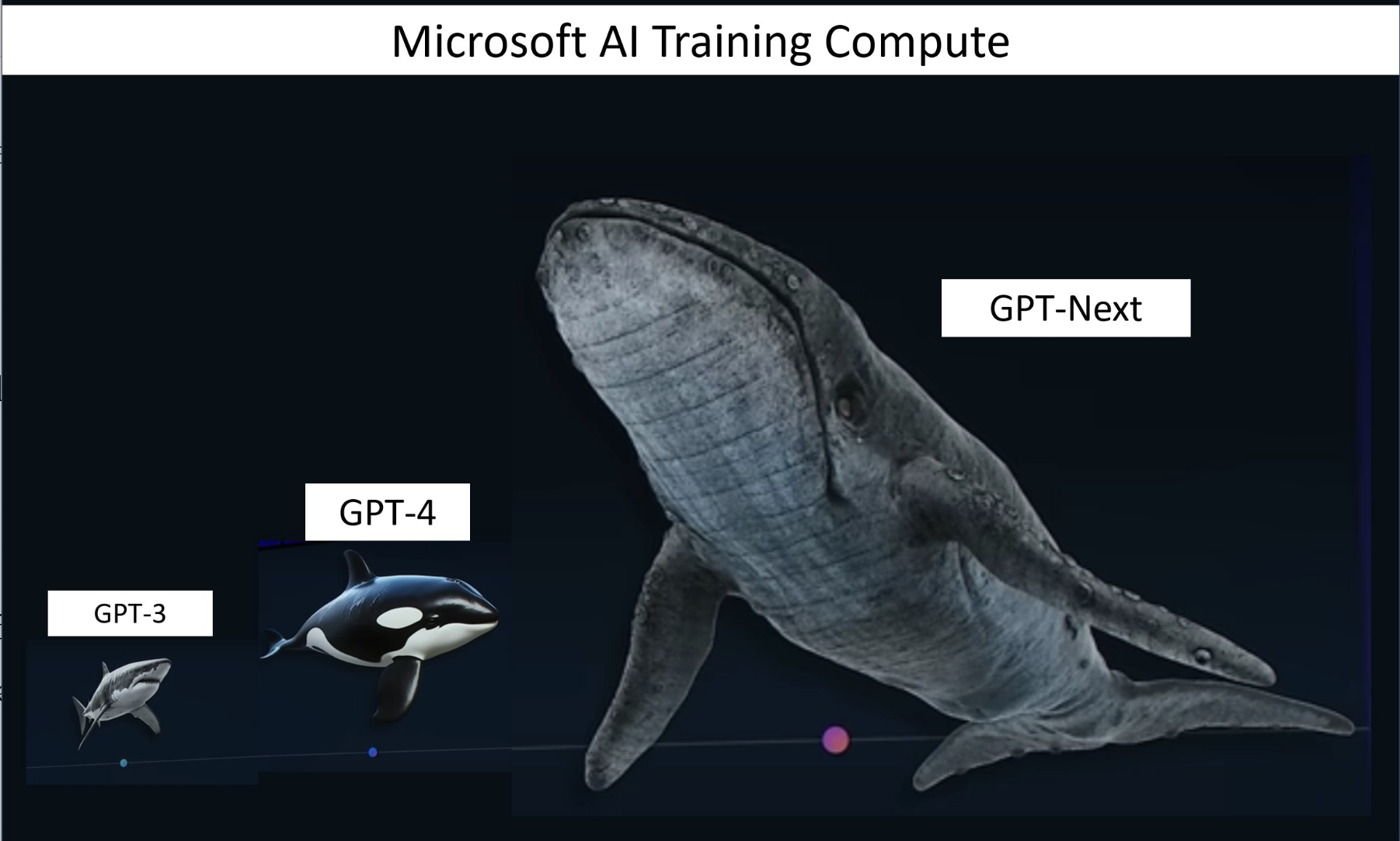

About a year ago, Microsoft compared GPT-3 to a shark and the just-released GPT-4 to an orca.

Considering the jump from GPT-3 to GPT-4, it was a comparison that, while lacking concrete metrics, a lot of people said felt right at the time.

In that same presentation, they then suggested GPT-5 (then called GPT-Next) would be a whale, and they had all three of them lined up on screen.

No metrics, no real numbers.

Just a 200-or-so kilogram shark, a ~5,000kg orca, and then a whale. I had to look this up, but humpback whales weigh around 30,000kg.

Source: Microsoft CTO Kevin Scott’s presentation on AI scaling.

It was in many ways a clever bit of PR.

You didn’t need to be an AI researcher or a marine biologist to get the message.

They were predicting a level of intelligence an order of magnitude beyond what we’d seen so far.

But over the past few weeks, after the final release of GPT-5, that same comparison has resurfaced.

It’s been mocked relentlessly.

To be fair, it’s easy to poke fun with the benefit of a year of hindsight.

But even then, just hours before GPT-5’s launch, the CEO of OpenAI had been posting cryptic messages online, including an image of Star Wars’ Death Star rising over Earth.

Source: Sam Altman, CEO of OpenAI, via X.

Expectations were through the roof.

But the launch was far from a whale, and even further from a Death Star.

If you’ve used it, you may have found it barely an improvement over GPT-4-level models.

Potentially a downgrade, if today’s Google News results are anything to go off.

Source: Google, August 25.

There’s been a lot said online by people who know this space better than I do.

The general view seems to be that we’ve hit a ceiling, or at least a slowdown.

The models are powerful, sure.

But they’re expensive to train, and to run.

Too expensive, maybe.

Most of these companies aren’t profitable, and from the outside, it’s hard to see a clear path that gets them there.

So unless something shifts dramatically, they’ll keep burning through billions each year.

Which means they’re still relying on venture capital, momentum, and a fair bit of hype just to stay afloat.

Whatever your take on that, it’s a reminder worth holding onto:

That gap between expectation and reality is where most of the value, or disappointment, tends to live.

In all honesty, I’m not really into tech.

My iPhone is a couple years old.

My laptop just died after a decade of loyal service.

And I certainly don’t pretend to be an expert in LLMs, transformer tech, or ML architecture.

Not because I don’t find it interesting. I do.

But my main interest is in people.

Human capability.

Always has been.

Not just friends and family. But my team, my clients.

Watching them kick goals, move forward, evolve.

That’s the stuff that lights me up.

So I don’t lean into these conversations all that heavily or all that often.

I also don’t pretend to know everything about AI, and I certainly don’t pretend to know anything about where it’s all headed beyond a 5-year time horizon.

But as a business owner, I have a responsibility to look to the future as best as I can so that I can protect and serve the people who depend on me:

Vendors looking to take their next step.

Buyers trying to set down roots.

Investors trusting us with their life’s savings.

Tenants looking for somewhere to call home.

My own team, and their families.

Contractors, and theirs.

All of them.

Because of that responsibility, we’ve been watching this space for years.

We actually previewed a few tools built on early versions of transformer tech.

Long before OpenAI released ChatGPT to a public beta.

Long before Anthropic, Google, and DeepSeek made their own public plays.

And long before there were a thousand GPT wrappers being sold as something they weren’t.

We passed on that suite of tooling at the time.

Not because the idea was bad; it wasn’t.

But back when it launched, the experience simply wasn’t good enough for our customers.

It might have saved us time.

But it could have cost us trust.

And there was data to back it up.

According to a study from Gartner, which tracked the sentiment of over 5,500 people:

“53% of customers would consider switching to a competitor if they found out a company was going to use AI for customer service.”

This is why we don’t implement something just because it’s new.

We implement it if it works, and only if the customer comes out in front.

That’s the litmus test.

Because in real estate, more than most industries, “trust” isn’t a word you can print on letterbox drops and signage, or render on your website, then call it a day.

Trust is the entire foundation of our product.

And every time you automate the wrong thing, or move too fast without thinking through the impact, you risk telling your customer:

You don’t matter enough for a human.

There’s a big difference between automating for efficiency and automating at the customer’s expense.

To be clear, I’m not against artificial intelligence. Not in the slightest.

It’s already making an improvement in our business by helping our team behind the scenes.

Taking rough meeting notes and structuring them.

Cleaning up reports.

Drafting spreadsheets based on our existing systems.

Enabling us to build new workflows and tools that were once cost-prohibitive.

Supporting, not replacing.

It’s quiet, careful, and completely human-led.

And it leads to a person who can now focus more of their energy on you, because their admin just got easier.

But that only comes from integrating suitable elements of this technology into our current systems and values.

The front-end side of AI, in my opinion, needs to be handled with far more precision.

Because this is where a lot of businesses could go wrong.

They see cost savings, but not the consequences.

They outsource all customer comms to a chatbot, or a templated message system, but unfortunately, not all optimisations are designed for a better experience.

That’s why we ask a different question:

What do our clients actually want?

And what do they deserve?

Because there are plug-and-play platforms promising to “revolutionise your business” every other week.

And they might, just not in the way you’d hoped.

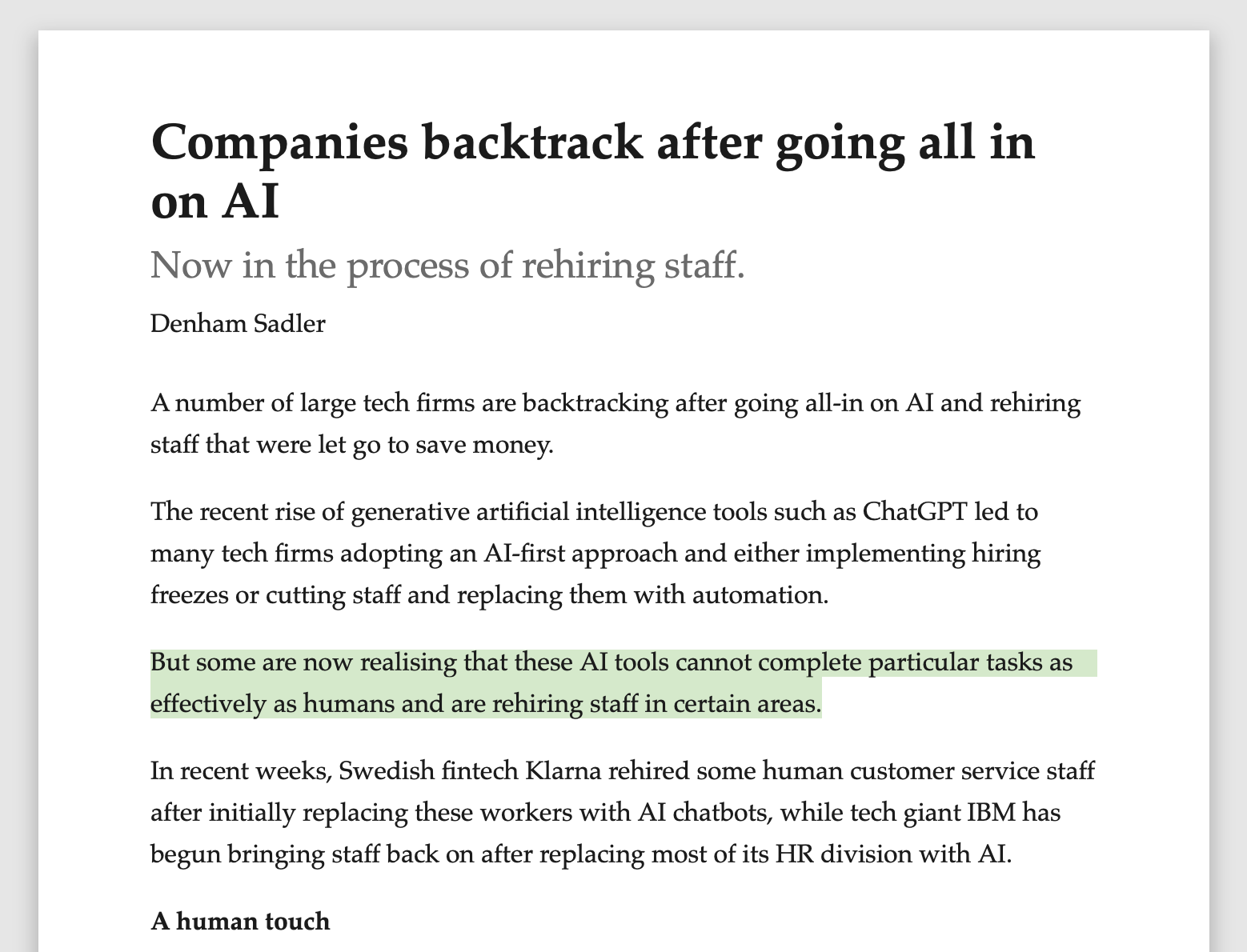

Just ask Klarna. Or IBM.

Between them, they laid off thousands of employees with plans to use AI to replace them.

Now in 2025, they’re both rehiring.

Credit: Denham Sadler, Information Age.

Or take a look at the report published by researchers at Apple.

It’s called The Illusion of Thinking.

Credit: Apple

“Through extensive experimentation across diverse puzzles, we show that frontier LRMs face a complete accuracy collapse beyond certain complexities. Their reasoning effort increases with problem complexity up to a point, then declines.”

Clearly, these models will improve.

The technology will improve.

But implemented poorly, these tools can break things.

Not just workflows:

Relationships.

Culture.

Trust.

So it’s not just customers I fear for in this landscape.

It’s service providers, business owners, too.

The ones that are too overworked to know better.

Or too small to ignore the fearmongering.

To ignore the flood of marketers trying to sell shovels during what they see as a gold rush.

And on some level, I do believe that if you don’t adopt AI at all in the years ahead, there’s a very real chance you’ll fall behind.

I say that with a level of sad logic:

Even if only 15–20% of your competitors adopt AI for their workflows, over time, that efficiency compounds.

As more of them lean in, and customers have fewer options to avoid AI, the market will shift.

Competitors who’ve automated wisely will start undercutting you on price.

Not because their product is better.

Nor their service better.

But because their costs are lower.

Many businesses may be forced to adopt AI in certain areas, just to stay competitive.

Not to chase profits, but to protect quality.

To keep delivering a high standard at an affordable price.

To stay in business.

To look after our teams.

And the risk to our communities is real, too.

When good businesses step back, the remainder will fill the space.

And if those businesses care less about ethics, quality, or the people involved, then we all lose.

This is a very real scenario that plays out in every industry.

If you’re a business owner who genuinely cares, you’ll know what I mean.

There’s a different kind of responsibility here.

So I don’t think the answer is to sit back and say:

“I don’t like where this is going, so I’ll have nothing to do with it.”

Instead, maybe it’s:

“I don’t like where this is going. So I’m going to stay involved, and do it properly.”

Because someone’s going to use this tech in the wrong way.

Someone probably already is.

But that doesn’t mean you walk away.

If you care about people:

Your customers, your team, your product.

You hold the bar.

You get involved.

And there are people in my network, people I respect, trying to do just that.

In all honesty, I commend them.

They’re pushing the envelope with this technology.

Trying to build something.

And to do the right thing in the process.

And that, to me, is the real driver.

For business owners who care about doing things the right way.

Who take pride in their work and operate with integrity.

We need to hold the bar.

And to do that, we have to stay in the game.

Will this all look different in a decade?

Almost certainly.

But my hope is that I’ll be able to look back on this piece one day, and on this time in history, and know that we stayed grounded.

That we chose people.

And that we made decisions we could be proud of.

Because trends will shift.

Technology will leap ahead.

But trust is earned, not engineered.

And, to finally explain what I mentioned a couple thousand words above:

“Wherever you go, there you are.”

Julie Cross was talking about life.

About how, when we try to run from it, we carry our fears, our flaws, our integrity, into every new chapter.

But I think it applies here, too.

In the race to automate, shortcut, and optimise, the thing we keep forgetting is that there’s eventually a person on the other end.

Whether it’s a phone call, a live chat, or a house inspection:

At some point, your customer will meet you.

Or deal with you.

Or your product.

Or your team.

And that will still matter far more than how quickly you can get them there.

So at River, our position is clear:

We’re cautious, we’re optimistic.

And we’re determined to do what’s right.

Not anti-AI.

Nor AI-everything.

Just pro-human.

References: